Software is often the biggest part of embedded projects today. As processors have become more powerful and less costly, large amounts of hardware have migrated inside the chip; this has left the software team with a bigger job than the hardware designer. Faster processors with more memory resources are facilitating a migration away from “bare-metal” towards hosted environments for applications.

A bare-metal environment is one in which there is no OS and the development team must write everything from scratch. This approach works very well for small designs, and tends to become a burden once projects reach the scale typical of 32-bit processors. It is not really the number of bits of addressability or the width of the data bus that calls for an OS; rather, if a colour LCD is being used instead of a monochrome character LCD, for example, an OS with display drivers can shorten the design cycle considerably.
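As a rough illustration of what "writing everything from scratch" can look like, the following is a minimal sketch of a cooperative scheduler that a bare-metal project might run from its main loop. The task names, the periods, and the idea of calling `scheduler_tick()` once per timer tick are all assumptions made for this example, not details from a specific project.

```c
#include <stddef.h>
#include <stdint.h>

typedef void (*task_fn)(void);

/* One entry per periodic job; all names and periods here are hypothetical. */
typedef struct {
    task_fn  run;           /* function to call when the task is due   */
    uint16_t period_ticks;  /* how often the task runs, in timer ticks */
    uint16_t countdown;     /* ticks remaining until the next run      */
} task_slot;

static unsigned sample_runs;
static unsigned display_runs;

static void sample_inputs(void)   { sample_runs++;  }  /* stand-in for real I/O   */
static void refresh_display(void) { display_runs++; }  /* stand-in for LCD update */

static task_slot tasks[] = {
    { sample_inputs,    1,  1 },   /* every tick     */
    { refresh_display, 10, 10 },   /* every 10 ticks */
};

/* One pass of the loop body: run every task whose countdown has expired. */
static void scheduler_tick(void)
{
    for (size_t i = 0; i < sizeof tasks / sizeof tasks[0]; i++) {
        if (--tasks[i].countdown == 0) {
            tasks[i].countdown = tasks[i].period_ticks;
            tasks[i].run();
        }
    }
}
```

On real hardware, `main()` would typically loop forever, wait for a timer flag, and then call `scheduler_tick()`. An RTOS replaces this hand-written table with its own scheduler, at the cost of extra memory and processor time.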

The hosted (RT)OS environment is more resource hungry and less efficient, so what does it offer in return? Availability of code, mind-share, and an easier, faster development cycle. That said, it’s unlikely anybody will need Linux for an 8-bit processor controlling a rice cooker. Everything has its place, but the bigger projects have become easier to work on with so many OS options available.

Before facing the question of bare-metal vs. hosted, some fundamental questions should be considered:

- Is a programmable-hardware solution a better fit? If so, a programmable logic device (PLD) or a field-programmable gate array (FPGA) is the way to go. Unlike software, a gate-array design allows many things to happen simultaneously, and they do. The fastest possible solution is usually achieved with this approach.

- Would a microcontroller or DSP based solution do in place of the above hardware solution? This shifts the design language from a hardware description, in which everything executes simultaneously, to software executed as a single thread, written in assembly language, C, C++, or a mix of these. The downside is that execution is linear: only one instruction can be executed at any given time. A DSP can perform several operations simultaneously, but what is written is still software, because a processor runs a thread of execution sequentially. In the FPGA case, there is no program counter stepping through instructions; data is processed in real time as it arrives. The upside of the processor-based approach is that the code can be maintained more easily, and development can in many cases be less expensive. On the hardware side, processors also typically draw less power than FPGAs.

- Does the application require extensive computation, or is it limited to simple math such as addition, subtraction, and bit shifting? If the answer is yes to extensive computation, a DSP may be better suited. If, on the other hand, the application requires no more than simple arithmetic and does not need a large data space, a microcontroller will be a better fit. Microcontrollers come out the winner in a surprising number of applications. They are cheaper in their own right, and they tend to be less expensive to develop with. They also seem to have captured the lion’s share of development energy, meaning the choices are many.
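To give a feel for how far "addition, subtraction, and bit shifting" can go on a small microcontroller, here is a minimal sketch of a smoothing filter (an exponential moving average) that uses no multiply or divide. The names `ema_filter`, `ema_init`, `ema_update`, and the shift value are illustrative assumptions, not taken from any particular project.

```c
#include <stdint.h>

/* Hypothetical smoothing factor: alpha = 1 / 2^FILTER_SHIFT. */
#define FILTER_SHIFT 3u

/* The accumulator holds the filtered value scaled by 2^FILTER_SHIFT,
 * so the fractional part is not lost between updates. */
typedef struct {
    uint32_t acc;
} ema_filter;

static void ema_init(ema_filter *f, uint16_t initial)
{
    f->acc = (uint32_t)initial << FILTER_SHIFT;
}

/* Feed one sample and return the smoothed value.
 * Uses only a subtract, an add, and two shifts. */
static uint16_t ema_update(ema_filter *f, uint16_t sample)
{
    f->acc = f->acc - (f->acc >> FILTER_SHIFT) + sample;
    return (uint16_t)(f->acc >> FILTER_SHIFT);
}
```

A filter like this suits noisy sensor readings on an 8-bit part. Work such as FFTs or long FIR filters, which is dominated by multiply-accumulate operations, is where a DSP starts to earn its cost.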

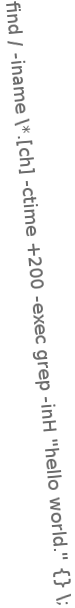

An illustration of low-level firmware code is shown below. The example depicts design expertise typically employed in our development cycle.

    LRXRATE                             ;Generate counter values for bulk transfer mode.
            btfsc   _bulkrateflag       ;If the bulk channel flag was set,
            goto    LRXSTARTSTOP        ;then we come here. This should
                                        ;be the sample-rate setting byte.
            movf    _rxholdreg,w
            sublw   _maxtxrate          ;If request out of bounds, reject.
            btfss   _c                  ;---bit set if result is positive.
            goto    LRXDATAEND
            movlw   HIGH(LRXRATE)       ;Setup pclath register for computed
            movwf   _pclath             ;goto.
            bcf     _c                  ;Ensure when we rotate, the lsb
            rlf     _rxholdreg,w        ;is clear.
            andlw   _lonibble >> 1      ;Mask off the three lsbs.
            addwf   _pcl,f
            movlw   ((_adcsr * 3) / 1)
            goto    LRXSTORERATE
            movlw   ((_adcsr * 3) / 2)
            goto    LRXSTORERATE
            movlw   ((_adcsr * 3) / 5)
            goto    LRXSTORERATE
            movlw   ((_adcsr * 3) / D'10')
    LRXSTORERATE
            movwf   _bulkrate
            bsf     _bulkrateflag
            return

A block of code from an embedded control application.

The code is written for a Microchip PIC processor. Comments are detailed enough that it may be interpreted without further reading. Documentation, as always, is critical to the success, portability and maintainability of firmware developed for any project.

The above code shows a configuration function for an embedded control application. When this code executes, it first decides whether valid data is present, then verifies that the data is within the expected bounds. If all is well, the value is converted into a program branch offset. The offset is then added to the program counter, and the branch is thus ‘taken’. At the destination of the branch, the value wanted by the original calling function is loaded into the processor’s accumulator, and a second, unconditional branch is taken to an exit point for the block. At the exit point, the freshly obtained result is stored, and the program returns to the main thread of execution.
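For readers more at home in C, the bounds check and table dispatch described above can be approximated as follows. This is a simplified sketch, not a translation of the original routine: it omits the initial flag test, and `ADC_SAMPLE_RATE`, `MAX_TX_RATE`, and the function name are assumed values and names chosen only so the example compiles.

```c
#include <stdbool.h>
#include <stdint.h>

/* Assumed values; the article does not give the real constants. */
#define ADC_SAMPLE_RATE 20u   /* stands in for _adcsr                  */
#define MAX_TX_RATE      3u   /* highest selector accepted as in range */

/* The C counterpart of the computed goto: one table entry per selector.
 * Each entry is a constant expression, evaluated by the compiler. */
static const uint8_t rate_table[MAX_TX_RATE + 1u] = {
    (ADC_SAMPLE_RATE * 3u) / 1u,
    (ADC_SAMPLE_RATE * 3u) / 2u,
    (ADC_SAMPLE_RATE * 3u) / 5u,
    (ADC_SAMPLE_RATE * 3u) / 10u,
};

static uint8_t bulk_rate;       /* stored counter value               */
static bool    bulk_rate_set;   /* set once a valid rate is stored    */

/* Returns false and leaves the stored rate untouched if the request
 * is out of bounds; otherwise stores the looked-up counter value. */
static bool set_bulk_rate(uint8_t request)
{
    if (request > MAX_TX_RATE) {
        return false;
    }
    bulk_rate = rate_table[request];
    bulk_rate_set = true;
    return true;
}
```

The assembly version reaches the same result by adding the doubled selector to the program counter, so that execution lands on the matching `movlw`/`goto` pair; the array index plays that role here.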

The reader may notice that a little higher-level math appears to be taking place in the operands of some of the assembly language instructions. This arithmetic is computed at assembly time. The code that is actually programmed into the device will have a fixed number in place of each computation. As a result, the code is highly portable and can easily be moved between processors in the Microchip family. Reassembling the source is all that is required to accommodate a change in processor clock speed as well.
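The same idea carries over to C, where constant expressions are folded at compile time. The sketch below derives a timer reload value from a clock-frequency constant, so that changing one definition and rebuilding is enough to retarget the code. All of the constants are assumed for illustration; the divide-by-four reflects the classic 8-bit PIC instruction cycle of four oscillator periods.

```c
#include <assert.h>
#include <stdint.h>

/* Assumed board parameters; change F_OSC_HZ and rebuild to retarget. */
#define F_OSC_HZ        4000000UL            /* oscillator frequency       */
#define INSTR_CLOCK_HZ  (F_OSC_HZ / 4UL)     /* one instruction per 4 Tosc */
#define PRESCALER       4UL                  /* timer prescaler setting    */
#define TICK_HZ         1000UL               /* desired interrupt rate     */

/* Timer counts per tick, computed entirely by the compiler. */
#define TIMER_COUNTS    (INSTR_CLOCK_HZ / (PRESCALER * TICK_HZ))

/* Fail the build, rather than the product, if the value cannot fit
 * in an 8-bit timer. */
static_assert(TIMER_COUNTS >= 1UL && TIMER_COUNTS <= 256UL,
              "tick rate not reachable with this clock and prescaler");

/* An 8-bit up-counter overflows after 256 counts, so preload the rest. */
#define TIMER_RELOAD    ((uint8_t)(256UL - TIMER_COUNTS))

static const uint8_t timer_reload = TIMER_RELOAD;
```

As with the assembler expressions, only the final number ends up in the device; the arithmetic itself costs nothing at run time.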

J-Tech Engineering is available to discuss any special coding requirements for your products. Please see the company information page for contact information.